Introduction

Not long ago, AI felt like something you tested out just to see what it could do.

In 2026, that phase is long gone.

Today, AI tools are woven into everyday life. People use them to write emails, research topics, plan projects, learn new skills, create content, manage time, and even help think through decisions. For many of us, AI has quietly become a daily companion — not flashy, not surprising, just… there when we need it.

And yet, one question keeps popping up:

Can AI be 100% trusted in 2026?

It’s an honest question. AI responds fast. It sounds confident. Sometimes it explains things better than people do. At the same time, concerns haven’t disappeared. People still worry about wrong answers, privacy issues, misinformation, and relying on AI a little too much.

This article isn’t here to scare you or sell you hype. Instead, it offers a grounded, practical look at AI trust in 2026 — how AI actually works, when it’s reliable, when it isn’t, and how to use AI tools responsibly without losing control or common sense.

Whether you use AI for work, business, learning, content creation, or everyday tasks, this guide is meant to help you think clearly about trust.

Why Trust in AI Matters More Than Ever in 2026

A few years ago, using AI was optional. Now, for many people, it feels unavoidable.

AI tools are everywhere:

- writing and editing content
- learning new skills
- planning businesses
- handling customer support
- boosting productivity
- automating daily tasks

When a tool starts influencing real decisions, real outcomes, and real reputations, trust stops being a side issue.

The conversation has shifted. It’s no longer:

“Wow, AI is impressive.”

It’s now:

“Is this reliable enough to depend on?”

That shift explains why questions like “can AI be trusted,” “AI reliability,” and “AI trust issues in 2026” come up so often.

What Does It Actually Mean to “Trust” AI?

This is where a lot of confusion starts.

Trusting AI does not mean:

- believing everything it says
- treating it like a human expert
- letting it make decisions for you

Real trust looks different.

It means:

- knowing what AI is good at
- understanding where it falls short
- using it confidently with limits
- verifying important information

Once you stop seeing AI as an authority and start seeing it as a tool, the trust question becomes much easier to answer.

How AI Really Works (Without the Tech Jargon)

Here’s something many people forget:

AI doesn’t think.

AI doesn’t understand meaning like humans do.

AI doesn’t know what’s true or false.

AI works by:

- analyzing massive amounts of data
- spotting patterns
- predicting the most likely response

That’s why AI can sound convincing while still being wrong. It explains things smoothly, even when the information is outdated, incomplete, or missing context.
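To make “predicting the most likely response” a little more concrete, here is a deliberately tiny sketch in Python. It is a toy bigram word counter, not how real AI models are built (they use neural networks trained on vastly more text), and the three example sentences are made up for illustration. All it does is pick whichever word most often followed the previous one, so a mistake that shows up more often in its data beats the correct fact.

```python
from collections import Counter, defaultdict

def train_bigrams(sentences):
    """Count how often each word follows each other word (a toy 'pattern' model)."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())  # .get avoids creating empty entries
    if not counts:
        return None
    return counts.most_common(1)[0][0]

def continue_text(follows, start, max_words=5):
    """Greedily extend `start` one most-likely word at a time."""
    words = start.lower().split()
    for _ in range(max_words):
        nxt = predict_next(follows, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)

# Hypothetical toy "training data": the common mistake appears more often than the fact.
corpus = [
    "the capital of australia is sydney",
    "many people think the capital of australia is sydney",
    "the capital of australia is canberra",
]

model = train_bigrams(corpus)
print(continue_text(model, "the capital of australia is"))
```

The output reads fluently and sounds sure of itself, yet it says Sydney (the real capital is Canberra), simply because “sydney” followed “is” more often in the made-up data. Real systems are far more sophisticated, but the lesson carries over: the most likely answer is not always the true one.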

Once you understand this, a lot of AI trust issues suddenly make sense.

So… Can AI Be 100% Trusted in 2026?

The honest answer?

No. AI cannot be trusted 100%.

But here’s the part people often miss:

Humans can’t be trusted 100% either.

Doctors make mistakes.

Experts disagree.

People misinterpret information every day.

The real goal isn’t perfect trust.

It’s informed trust.

Where AI Can Be Trusted (When Used Wisely)

AI is incredibly useful in the right situations.

Research and General Information

AI is great for:

- summarizing topics
- explaining complex ideas
- organizing information

As long as you treat it as a starting point, not the final answer, it’s very effective.

Productivity and Automation

AI works well for:

- drafting emails
- creating outlines
- automating repetitive tasks

These are low-risk areas where AI genuinely saves time.

Learning and Skill Support

AI helps with:

- explanations
- examples
- practice and revision

When AI supports learning instead of replacing it, trust is reasonable.

Where AI Should Not Be Fully Trusted

Some areas still require extra caution.

Medical, Legal, and Financial Decisions

AI can provide general information, but it should never replace qualified professionals.

Emotional or Personal Judgment

AI doesn’t understand emotions, relationships, or long-term consequences.

Sensitive or Confidential Data

Sharing personal IDs, private documents, or business secrets remains one of the biggest AI trust risks in 2026.

Why AI Often Feels More Trustworthy Than It Is

AI responses are usually:

- fluent
- confident
- well-structured

Humans naturally associate confidence with accuracy — and that’s where blind trust starts.

Ironically, the more polished AI sounds, the more carefully its output should be checked.

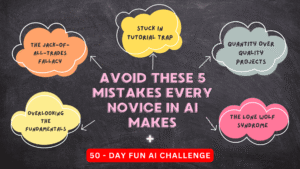

Common AI Trust Mistakes People Make

Most AI problems don’t come from bad intentions. They come from habits like:

- trusting answers without verification
- oversharing personal data
- letting AI replace thinking
- assuming AI understands intent
- avoiding responsibility for AI-assisted decisions

These mistakes are subtle, but they add up over time.

Why Human Judgment Still Matters More Than Ever

One of the biggest misunderstandings about AI is the idea that human judgment matters less now.

In reality, it matters more.

AI can suggest.

AI can analyze.

AI can generate.

But humans decide.

Whether you’re publishing AI-assisted content, following AI-generated advice, or using AI at work, the final responsibility always stays with you. Experience, values, and common sense are what turn AI output into something trustworthy.

Two people can use the same AI tool and get completely different results — simply because one applies judgment and the other doesn’t.

Using AI Without Losing Your Human Skills

Another real concern in 2026 is skill erosion.

When AI does everything, people risk losing:

- creativity
- problem-solving ability
- independent thinking

The solution isn’t avoiding AI. It’s intentional use.

Use AI to:

- speed up drafts, not skip thinking
- explore ideas, not replace creativity
- learn faster, not avoid learning

The healthiest relationship with AI is collaborative. Humans bring context and ethics. AI brings speed and support.

How to Use AI Responsibly in 2026

A few simple habits make a big difference:

- verify important information
- protect personal and business data
- use AI to support thinking, not replace it
- add your own insight to AI output
- take responsibility for decisions

Follow these, and AI becomes reliable enough to trust for most everyday work.

How Google Looks at AI and Trust Today

This matters, especially for website owners and creators.

Google doesn’t punish content just because AI helped create it.

Google punishes unhelpful content.

Content that builds trust shows:

- real experience
- accuracy
- honesty
- responsibility

AI-assisted content that genuinely helps people aligns with Google’s E-E-A-T principles (Experience, Expertise, Authoritativeness, Trustworthiness). Content created mainly to manipulate search rankings does not.

Final Thoughts: Trust Comes From Awareness, Not Blind Faith

So, can AI be 100% trusted in 2026?

No — and that’s perfectly fine.

AI is powerful, but power always requires awareness.

When you understand what AI does well and where it falls short, it becomes:

- safer
- more reliable
- more valuable

The smartest users aren’t the ones who trust AI blindly.

They’re the ones who use AI thoughtfully, responsibly, and humanly.

That’s how AI becomes an advantage — not a risk.

“Smart Tech Tutor”

Learning technology with clarity, balance, and trust.